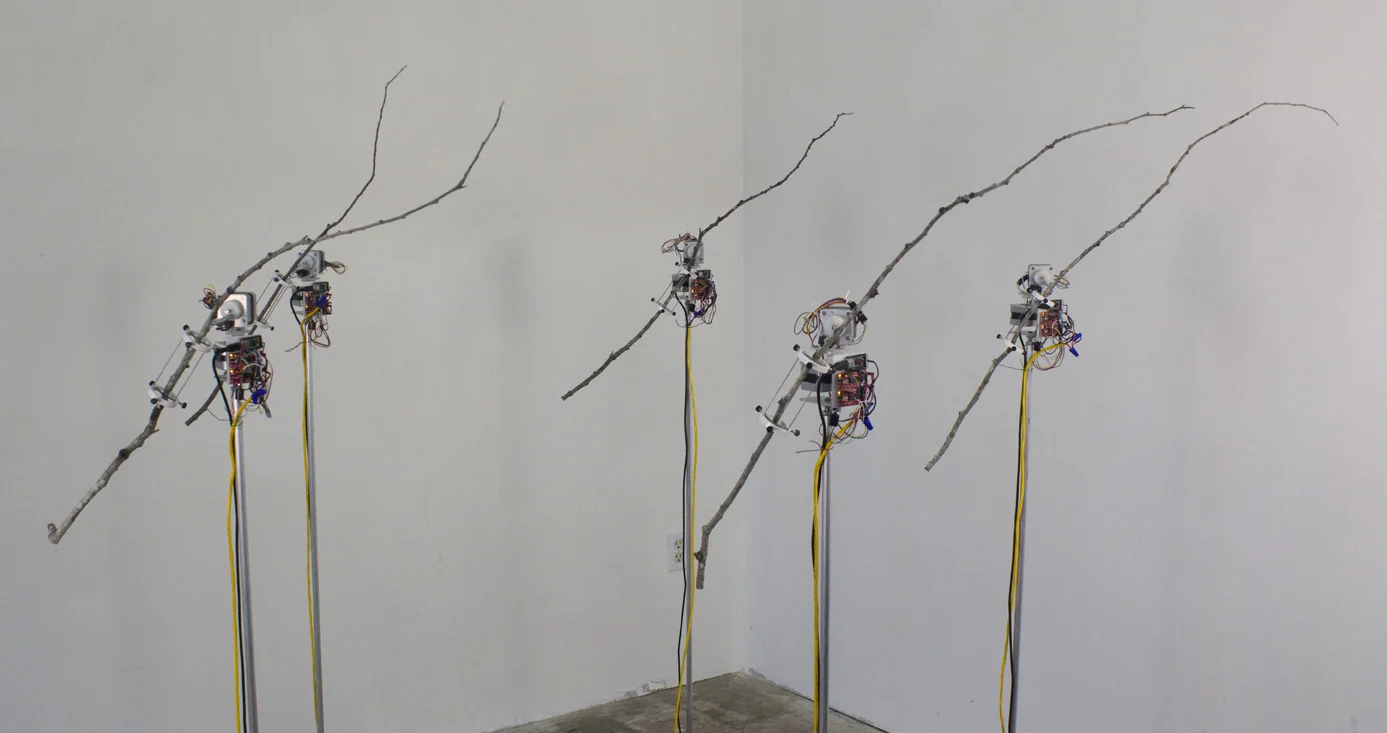

the two

is it possible for robots to fall in love?

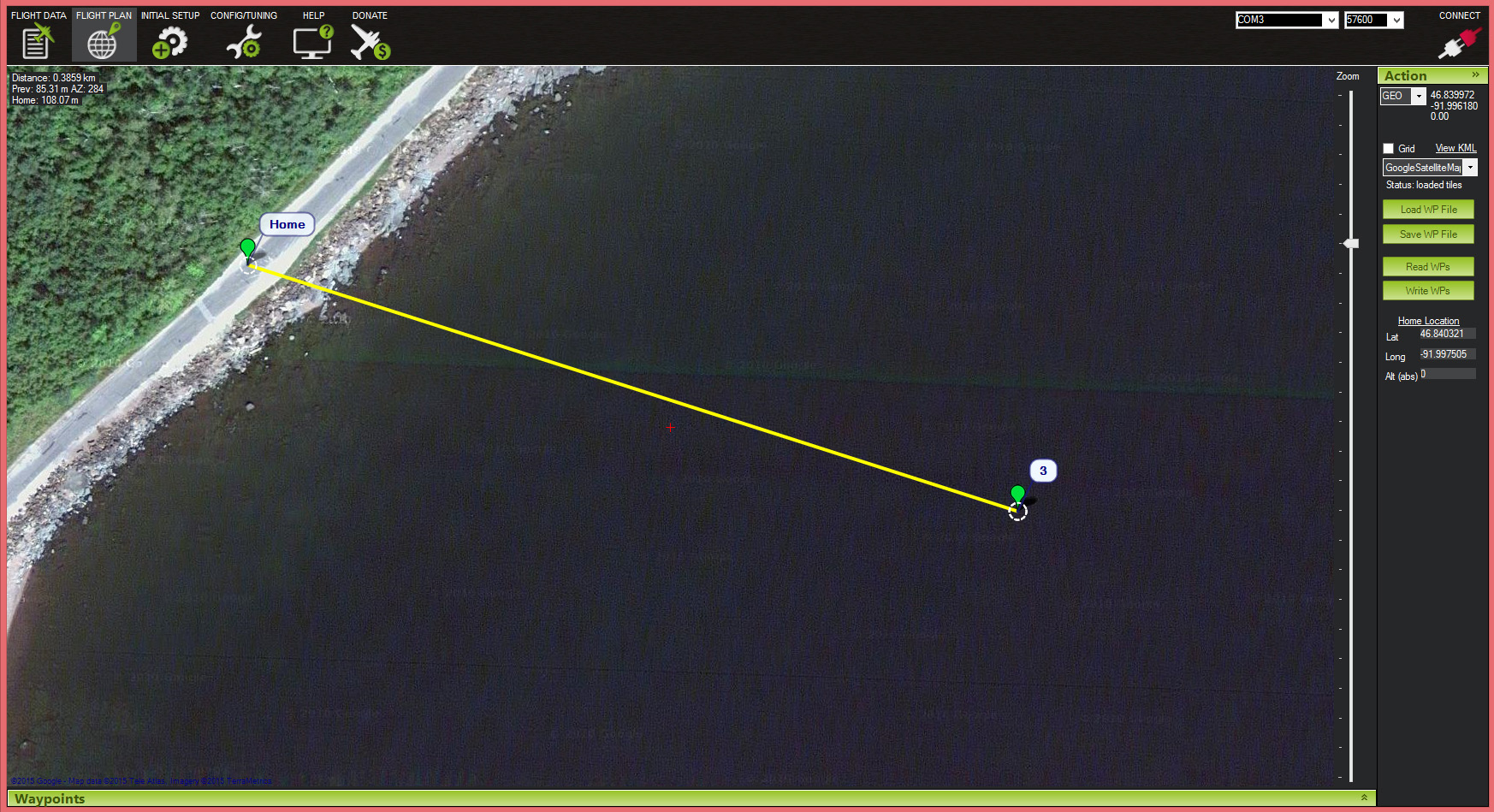

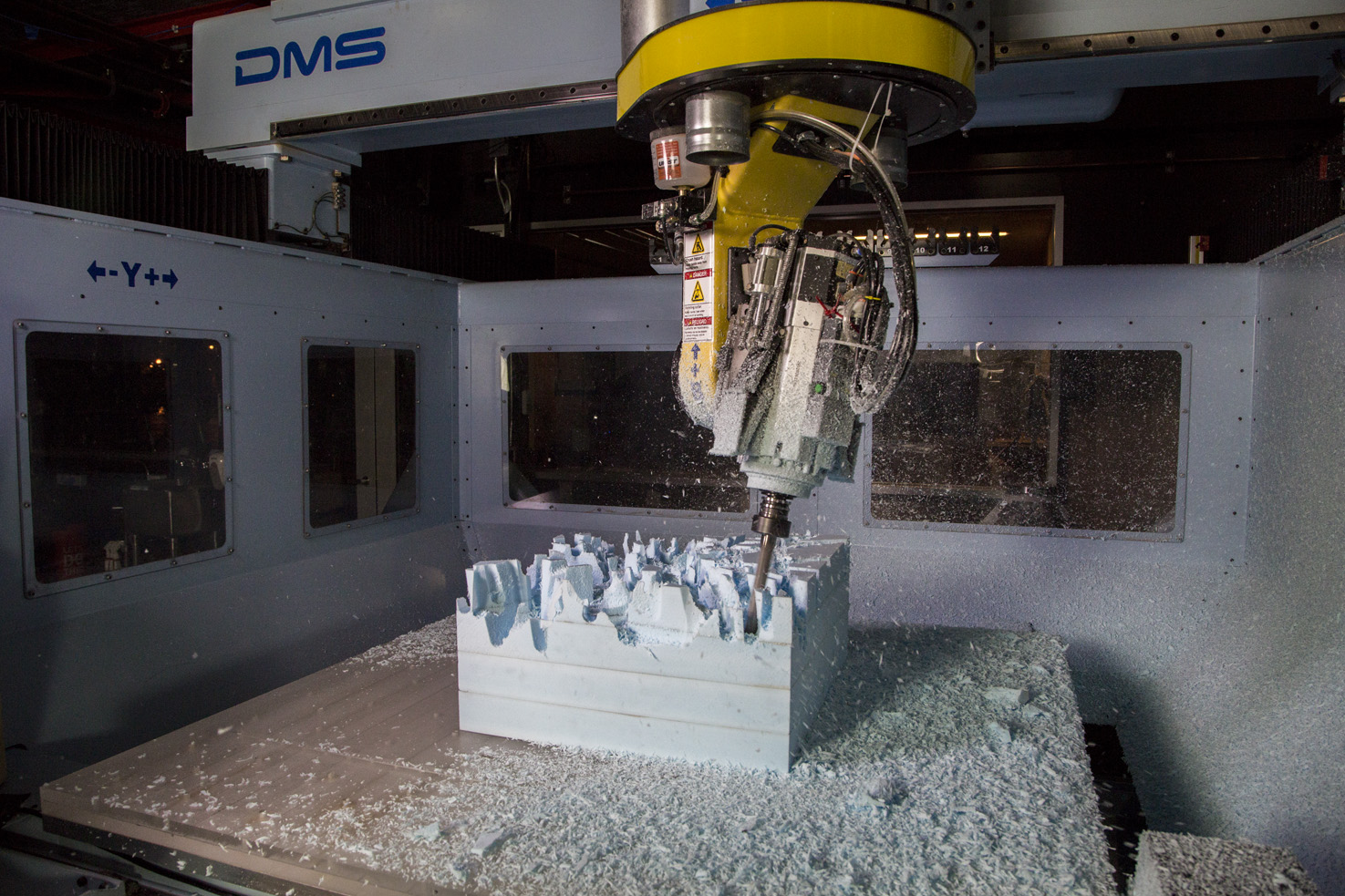

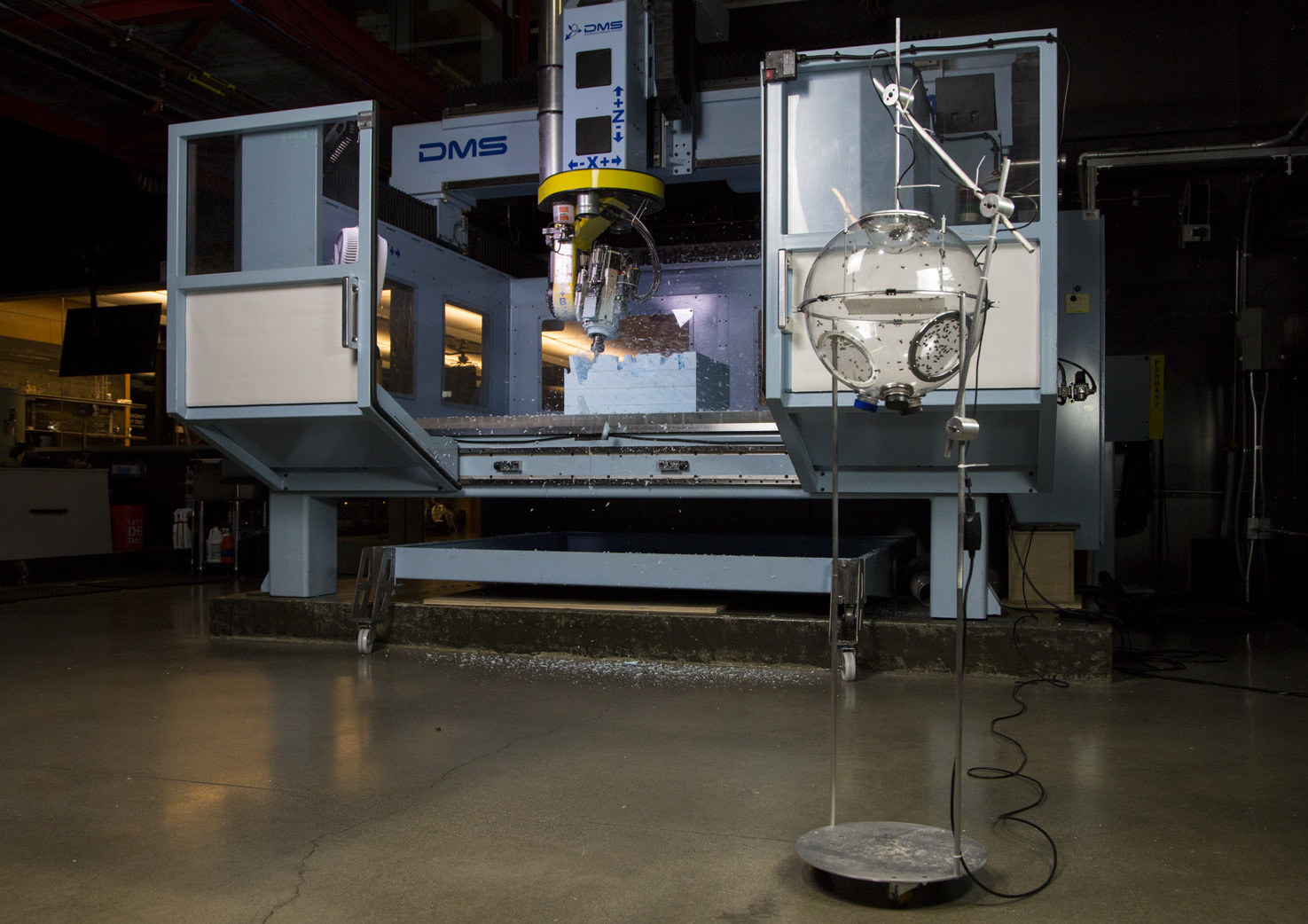

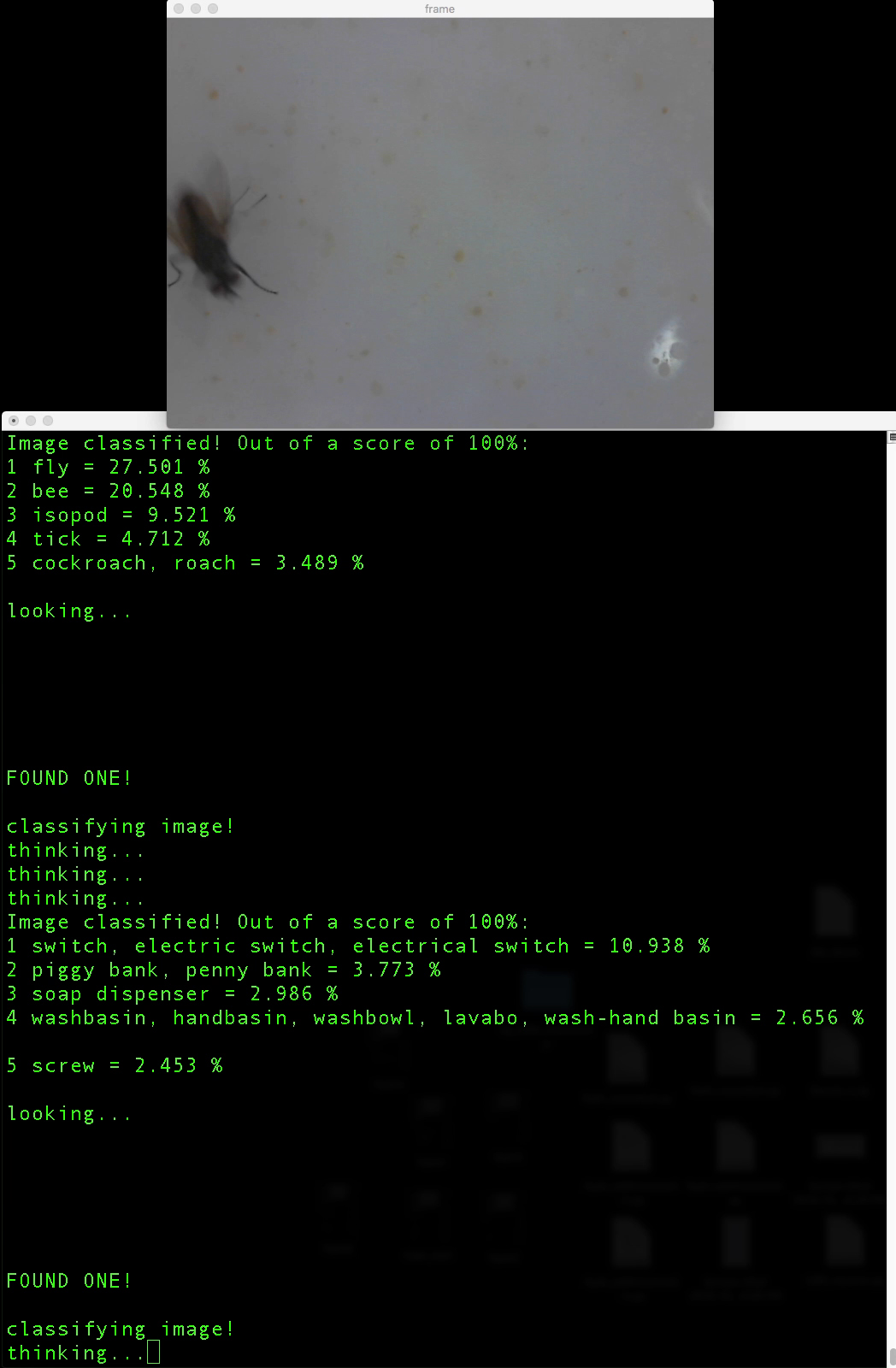

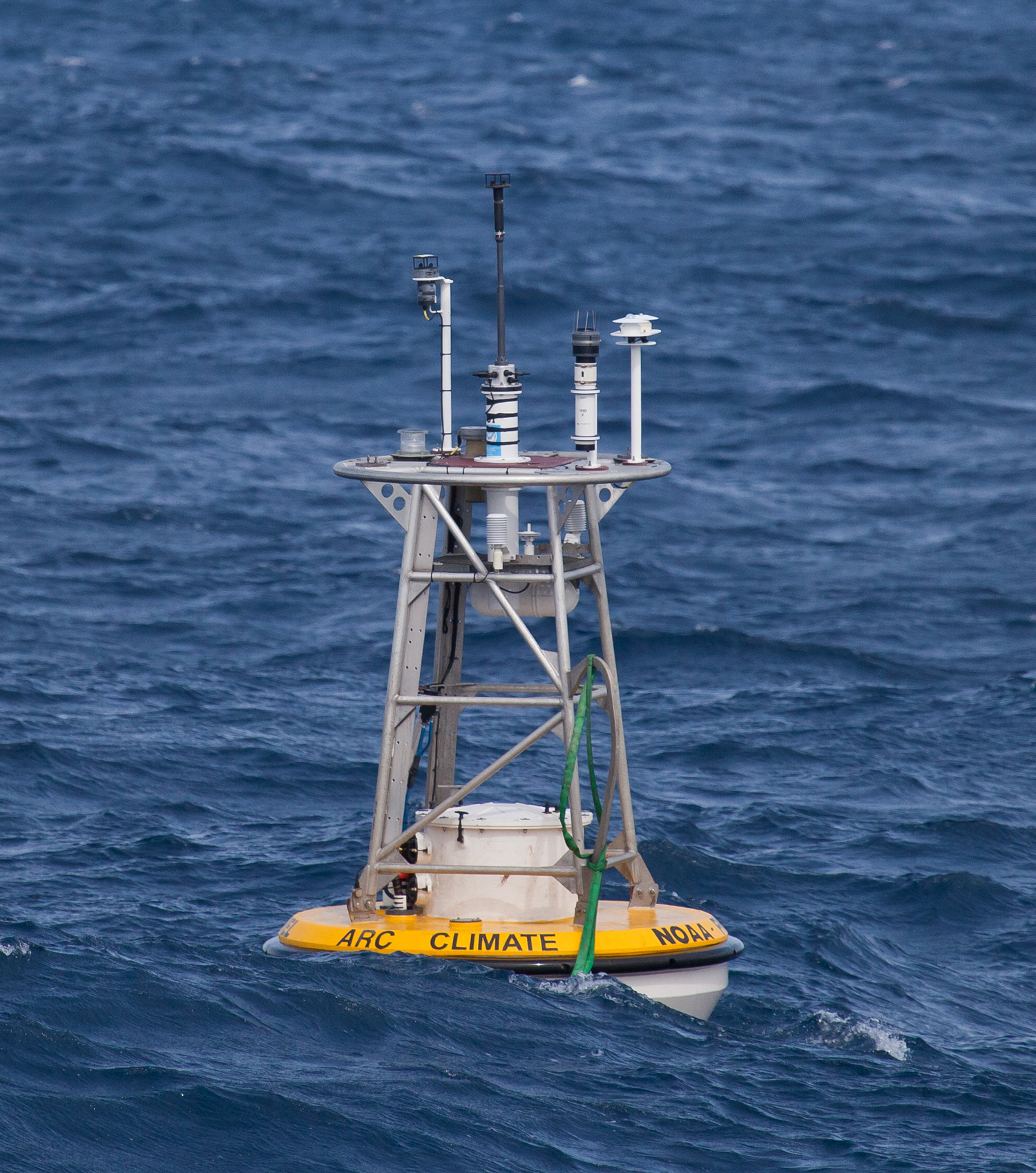

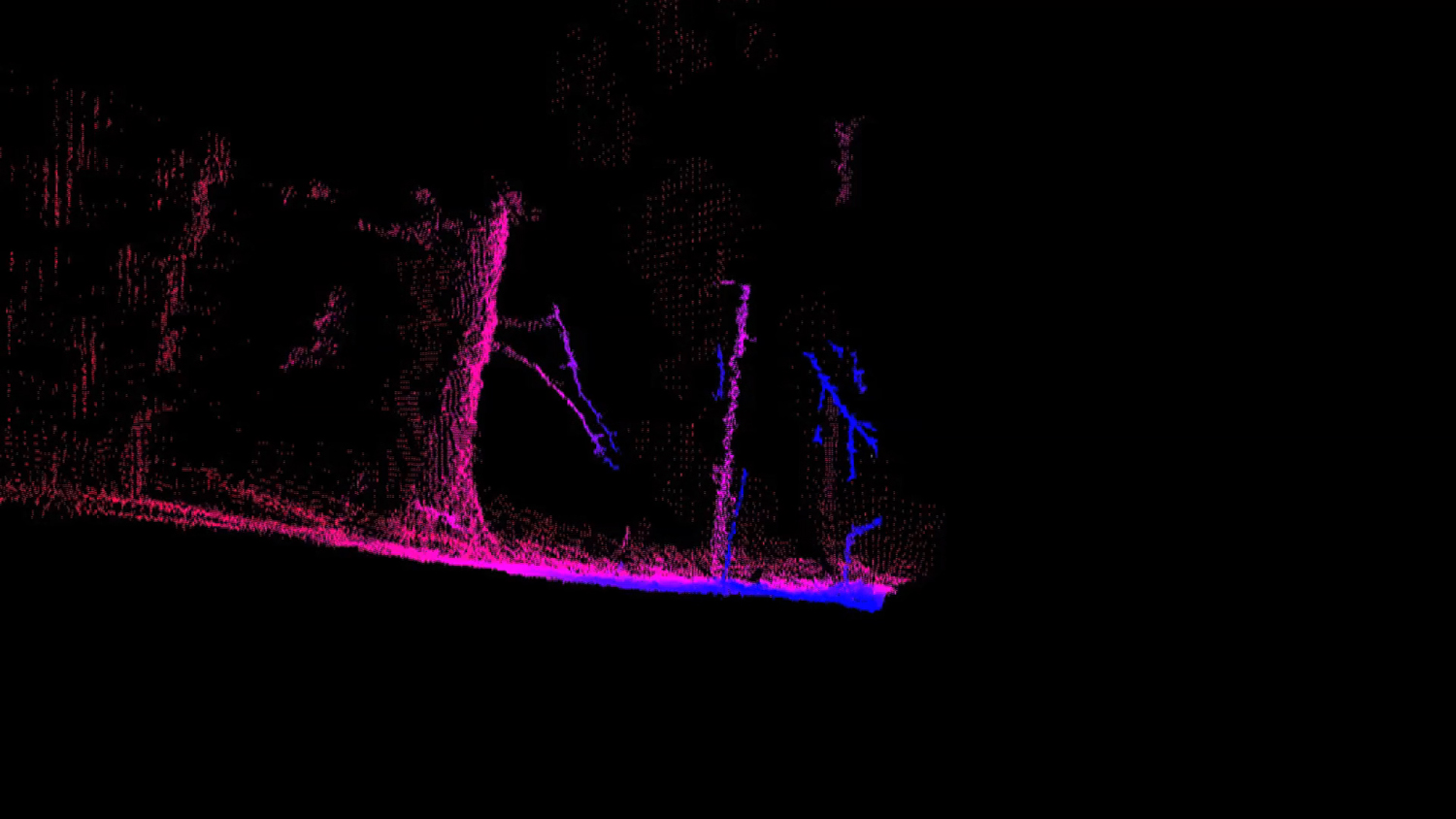

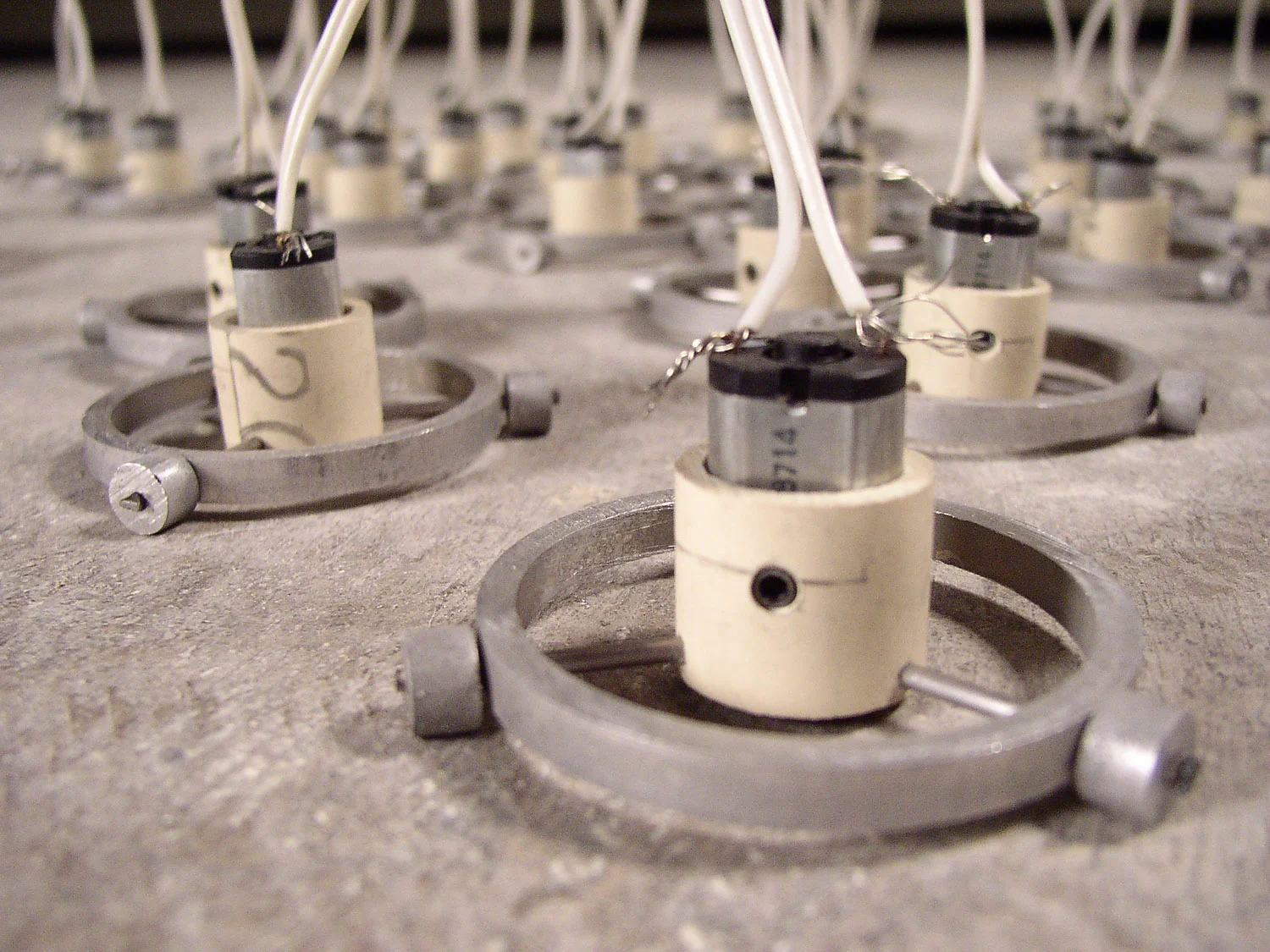

This installation consists of two identical robotic arms with cameras, connected to two identical computers. Each computer runs a custom deep neural network trained to recognize the other robot. Using their cameras, the robots attempt to find and track each other as they move independently. Playfully dancing, the robots at times seem attracted to each other, while at other times they seem repelled by their mates. Tension increases as they almost touch, only to quickly pull away. If one of the robots does not see the other, it goes to a resting position briefly before it begins to look for its counterpart again. When a robot positively identifies its mate a given number of times, the network is retrained on the new data. Through this continual training and retraining, the robots conceivably increase their proficiency at recognizing and finding one another. In this way, as they lock onto each other’s loving gaze, the robots become more and more familiar with their mates.
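The behavior described above can be read as a small state machine per robot. The following is only a rough sketch of that reading, not the installation's actual software: the class `RobotBehavior`, the parameters `retrain_after` and `rest_frames`, and the `mate_seen` flag (standing in for the neural network's verdict on a camera frame) are all hypothetical names chosen for illustration, and the real retraining step is replaced by a counter.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    SEARCHING = auto()  # looking around for the other robot
    TRACKING = auto()   # the other robot is in view
    RESTING = auto()    # brief pause after losing sight of it


@dataclass
class RobotBehavior:
    retrain_after: int = 5  # hypothetical: positive sightings before retraining
    rest_frames: int = 3    # hypothetical: how many frames the rest lasts
    state: State = State.SEARCHING
    positives: list = field(default_factory=list)
    rest_left: int = 0
    retrain_count: int = 0

    def retrain(self) -> None:
        # Placeholder for retraining the network on the collected frames.
        self.retrain_count += 1
        self.positives.clear()

    def step(self, frame, mate_seen: bool) -> State:
        """Advance one camera frame; mate_seen is the network's verdict."""
        if self.state is State.RESTING:
            # Assumption: frames are ignored while resting.
            self.rest_left -= 1
            if self.rest_left <= 0:
                self.state = State.SEARCHING
        elif mate_seen:
            self.state = State.TRACKING
            self.positives.append(frame)
            if len(self.positives) >= self.retrain_after:
                self.retrain()
        elif self.state is State.TRACKING:
            # Lost sight of the mate: rest briefly, then search again.
            self.state = State.RESTING
            self.rest_left = self.rest_frames
        return self.state
```

Each of the two robots would run its own copy of this loop, which is one way to picture why they move independently yet keep returning to one another.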